CrystalDiskMark has become a go-to utility for testing the speed of hard drives, solid-state drives (SSDs), USB sticks, and even NVMe storage. Designed with a simple interface and powerful backend, it provides quick access to benchmark results with minimal user effort. Whether you’re building a PC, optimizing web servers, or evaluating hardware for software development, CrystalDiskMark is often one of the first tools used to assess disk performance.

But a crucial question persists among both casual users and professionals: Do the results from CrystalDiskMark actually represent real-world performance? To answer this, we need to dig into what the tool measures, how it works, and how those figures compare to everyday usage.

Breaking Down the Metrics CrystalDiskMark Reports

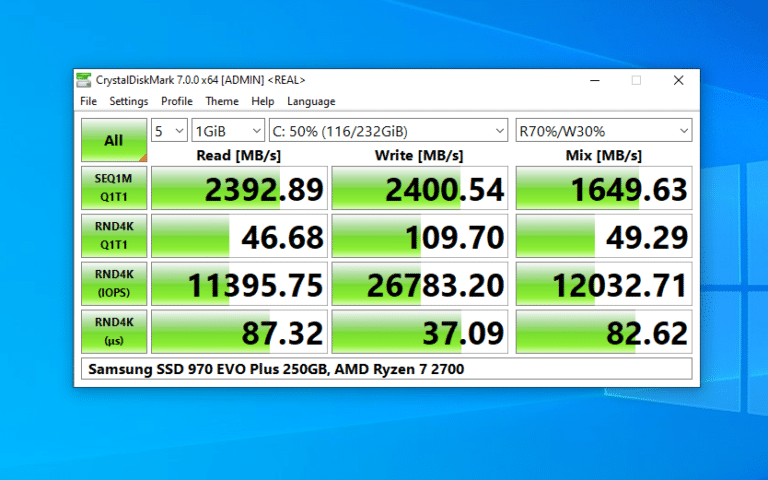

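In recent versions, CrystalDiskMark runs four default tests and offers optional modes and alternate views of the same data:

- Sequential Read/Write (1MiB blocks at Q8T1 and Q1T1)

- Random Read/Write (4KiB blocks at Q32T1 and Q1T1)

- Mixed I/O Tests (an optional combined read/write pass)

- IOPS (Input/Output Operations Per Second), an alternate view of the same results rather than a separate test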

Each result shows how the drive performs under specific conditions. Sequential read/write tests simulate transferring large files—ideal for video editing or moving ISO files. Random 4KiB read/write tests simulate typical system and application workloads where small files are accessed frequently, such as booting an OS or opening applications.

These results give users a controlled, repeatable way to measure and compare drive performance. However, these aren’t real-world workloads; they’re synthetic simulations.
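
The MB/s and IOPS views of a result are linked by simple arithmetic: throughput equals operations per second times block size. A minimal Python sketch of that conversion, assuming decimal megabytes (1 MB = 1,000,000 bytes) and binary block sizes (4KiB = 4096 bytes); the function names are illustrative and not part of CrystalDiskMark:

```python
def iops_to_mbps(iops: float, block_bytes: int = 4096) -> float:
    """Convert IOPS at a given block size to throughput in decimal MB/s."""
    return iops * block_bytes / 1_000_000


def mbps_to_iops(mbps: float, block_bytes: int = 4096) -> float:
    """Convert throughput in decimal MB/s to IOPS at a given block size."""
    return mbps * 1_000_000 / block_bytes


# Hypothetical reading: 15,000 IOPS on a 4KiB random read test.
print(f"{iops_to_mbps(15_000):.2f} MB/s")
```

This is why a 4KiB random result that looks tiny in MB/s can still represent tens of thousands of operations per second.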

Synthetic Benchmarks vs Real-World Usage

Synthetic benchmarks are designed to isolate variables and test specific capabilities of hardware components. This controlled environment is useful for finding the peak performance a storage device can reach under near-ideal conditions. But real-world performance includes unpredictable factors like:

- OS overhead

- Background processes

- File system behavior

- Thermal throttling

- Storage interface bottlenecks (e.g., SATA vs NVMe)

- Application-specific workloads

For example, a drive that scores 5000 MB/s in sequential read might not load games or launch apps any faster than one scoring 3000 MB/s, depending on how those applications access data.

Where CrystalDiskMark Excels

Despite its limitations, CrystalDiskMark does provide meaningful insights when used correctly:

Storage Device Comparison

When comparing two SSDs, say a PCIe Gen3 and a PCIe Gen4 NVMe drive, CrystalDiskMark quickly highlights the gap in peak performance, especially in sequential and random I/O.

Performance Consistency

Running the test multiple times can reveal thermal throttling or performance drops in lower-end SSDs. If the performance degrades in later tests, that’s a real concern in extended workloads.
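
One way to make that check less subjective is to write down the result of each pass and compare the last pass against the best one. The sketch below is a hypothetical helper, not a CrystalDiskMark feature; the 10% threshold and the sample numbers are assumptions chosen only for illustration:

```python
def throughput_drop(runs_mbps, threshold=0.10):
    """Return (fractional drop from best run to last run, whether it exceeds threshold)."""
    if len(runs_mbps) < 2:
        raise ValueError("need at least two runs to compare")
    best = max(runs_mbps)
    last = runs_mbps[-1]
    drop = (best - last) / best
    return drop, drop > threshold


# Hypothetical sequential write results from four back-to-back passes.
drop, flagged = throughput_drop([6900, 6850, 5200, 4100])
print(f"drop: {drop:.0%}, flagged: {flagged}")
```

A flagged result does not say why the drive slowed down; heat and cache exhaustion can both produce the same pattern, so it is a prompt to investigate rather than a diagnosis.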

Initial Validation

If you’ve just built a PC or installed a new drive, CrystalDiskMark confirms whether your hardware is configured properly. A Gen4 NVMe drive reporting SATA speeds? That’s a red flag you’d catch instantly.
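
To make the red flag concrete, a sequential reading can be compared against the approximate bandwidth ceiling of each interface. The ceilings below are derived from the published line rates and encoding overheads (SATA III at 6 Gb/s with 8b/10b encoding, PCIe 3.0 and 4.0 at 8 and 16 GT/s per lane with 128b/130b encoding); real drives land somewhat below them. The `likely_link` helper and its 5% tolerance are assumptions made for this sketch:

```python
# Approximate usable bandwidth ceilings in MB/s after line encoding.
INTERFACE_CEILING_MBPS = {
    "SATA III": 6_000 * 8 / 10 / 8,              # 600 MB/s
    "PCIe 3.0 x4": 8_000 * 128 / 130 / 8 * 4,    # about 3938 MB/s
    "PCIe 4.0 x4": 16_000 * 128 / 130 / 8 * 4,   # about 7877 MB/s
}


def likely_link(measured_mbps, tolerance=1.05):
    """Return the slowest interface whose ceiling could explain the reading."""
    by_speed = sorted(INTERFACE_CEILING_MBPS.items(), key=lambda kv: kv[1])
    for name, ceiling in by_speed:
        if measured_mbps <= ceiling * tolerance:
            return name
    return "faster than PCIe 4.0 x4"


# A Gen4 drive that reads around 3400 MB/s may be running on a Gen3 link.
print(likely_link(3400))
```

A match only shows which link speed is consistent with the number; a drive can also be slow for other reasons, such as a reduced lane count or throttling.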

Gaps Between Benchmark Scores and Real-World Experience

While CrystalDiskMark reports valuable stats, those numbers don’t always align with how the drive feels during use. Here’s why:

Queuing and Threading Differences

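Real-world desktop tasks rarely keep a deep queue of requests outstanding the way CrystalDiskMark's Q8T1 and Q32T1 tests do. In those labels, Q is the queue depth and T is the thread count, so Q8T1 means a single thread keeping 8 requests in flight, not 8 threads. Browsing the web or launching Photoshop mostly issues requests one or a few at a time, which is closer to the Q1T1 results.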
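
Little's Law gives a rough feel for why queue depth changes the numbers so much: sustained operations per second are approximately the number of requests in flight divided by the average latency per request. The sketch below assumes latency stays constant as the queue deepens, which real drives only approximate until they saturate; the 80 microsecond latency is a made-up figure:

```python
def estimated_iops(queue_depth, threads, latency_us):
    """Little's Law estimate: requests in flight divided by latency in seconds."""
    in_flight = queue_depth * threads
    return in_flight / (latency_us / 1_000_000)


# Hypothetical drive with 80 microseconds average 4KiB read latency.
for label, qd in (("Q1T1", 1), ("Q32T1", 32)):
    print(f"{label}: about {estimated_iops(qd, 1, 80):,.0f} IOPS")
```

Under these assumptions the same drive looks 32 times faster at Q32T1 than at Q1T1, even though a single waiting application would only ever see the Q1T1 figure.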

File Size Variance

Most benchmarks use fixed block sizes (e.g., 1MiB for sequential, 4KiB for random). In reality, file and request sizes vary widely. Games load textures, config files, and audio clips ranging from a few kilobytes to several megabytes, so no single synthetic number is fully representative.

SLC Caching and Sustained Writes

SSDs often use a fast cache (SLC buffer) for short bursts. CrystalDiskMark may report impressive write speeds while the cache is active, but sustained real-world writes might drop to much lower levels once the cache fills.
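
A simple two-speed model shows how large the gap can be. It assumes a fixed cache size, one speed while the cache has room, and one lower speed afterwards, and it ignores the cache draining in the background; all figures are hypothetical rather than measurements of any drive:

```python
def write_seconds(total_gb, cache_gb, cache_mbps, post_cache_mbps):
    """Seconds to write total_gb when the first cache_gb goes at cache speed."""
    fast_gb = min(total_gb, cache_gb)
    slow_gb = total_gb - fast_gb
    return fast_gb * 1000 / cache_mbps + slow_gb * 1000 / post_cache_mbps


def average_write_mbps(total_gb, cache_gb, cache_mbps, post_cache_mbps):
    """Average throughput over the whole write, in decimal MB/s."""
    seconds = write_seconds(total_gb, cache_gb, cache_mbps, post_cache_mbps)
    return total_gb * 1000 / seconds


# Hypothetical drive: 50 GB cache at 5000 MB/s, 1000 MB/s once it is full.
print(f"1 GB burst:   {average_write_mbps(1, 50, 5000, 1000):.0f} MB/s")
print(f"200 GB write: {average_write_mbps(200, 50, 5000, 1000):.0f} MB/s")
```

A benchmark whose test file fits entirely inside the cache only ever observes the first case.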

How Real-World Workloads Differ

Let’s walk through a few use cases to compare expectations based on CrystalDiskMark with actual outcomes.

Game Loading

You might see a 2 TB NVMe SSD score 7000 MB/s in CrystalDiskMark. However, its game loading times may differ from those of a 3500 MB/s drive by only 1-2 seconds in practice. That's because game loading often involves random access to thousands of small files, plus CPU-bound work such as decompression, rather than pure sequential reads.
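
A back-of-the-envelope model helps explain the small gap: treat load time as storage read time plus everything else the game does while loading. The amount of data and the non-storage time below are invented for illustration, and the model is optimistic because it assumes both drives reach their full sequential speed:

```python
def load_seconds(data_gb, drive_mbps, other_work_s):
    """Storage read time plus time spent on non-storage work, in seconds."""
    return data_gb * 1000 / drive_mbps + other_work_s


# Hypothetical level: 8 GB read from disk, 12 s of non-storage work.
fast = load_seconds(8, 7000, 12)
slow = load_seconds(8, 3500, 12)
print(f"7000 MB/s drive: {fast:.1f} s")
print(f"3500 MB/s drive: {slow:.1f} s")
print(f"difference:      {slow - fast:.1f} s")
```

Doubling the drive speed halves only the storage term, so the larger the share of non-storage work, the less the benchmark gap shows up in the loading screen.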

Video Editing

For creators working with large raw video files, sequential performance metrics do come closer to real-world behavior. Moving 100 GB of 4K footage between drives? Those 5000+ MB/s speeds show their value here, provided both the source and the destination drive can sustain them.
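
The arithmetic for a large copy is straightforward, as long as the slower side of the transfer is used. This sketch assumes sustained speeds with no cache exhaustion or throttling, and the 550 MB/s figure stands in for a typical SATA SSD:

```python
def transfer_seconds(size_gb, read_mbps, write_mbps):
    """Seconds to copy size_gb, limited by the slower of source read and destination write."""
    return size_gb * 1000 / min(read_mbps, write_mbps)


# 100 GB of footage, NVMe to NVMe versus NVMe to a SATA SSD.
print(f"NVMe to NVMe: {transfer_seconds(100, 5000, 5000):.0f} s")
print(f"NVMe to SATA: {transfer_seconds(100, 5000, 550):.0f} s")
```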

Web Hosting

Headline sequential scores can mislead in server scenarios. A web server running on SSD storage often benefits more from IOPS and random read speeds than sheer throughput. Serving many small files, handling HTTP requests, and managing database reads are bound by latency more than bandwidth.

Using CrystalDiskMark More Effectively

To bring benchmark results closer to real-world relevance, use these tips:

Run Multiple Passes

Run the test several times in a row, or after the system has been under load for hours, to see whether results hold up once the drive is warm.

Combine with Other Benchmarks

Use complementary tools like ATTO Disk Benchmark, AS SSD, or Anvil’s Storage Utilities for a broader understanding. Each one simulates different workloads.

Compare Against Real Tasks

Try timing actual file transfers or app launches and compare the results with your CrystalDiskMark readings. Check whether a gap in benchmark scores actually shows up in those timings rather than assuming it will.
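
Timing a real copy takes only a few lines of Python. The sketch below uses the standard library's `shutil.copyfile` and `time.perf_counter`; the `time_copy` name is made up for this example. It does not flush data to disk or bypass the operating system's file cache, so small files and repeated runs can report speeds well above what the drive itself delivers:

```python
import os
import shutil
import tempfile
import time


def time_copy(src, dst):
    """Copy src to dst and return (elapsed seconds, throughput in decimal MB/s)."""
    size_bytes = os.path.getsize(src)
    start = time.perf_counter()
    shutil.copyfile(src, dst)
    elapsed = time.perf_counter() - start
    mbps = size_bytes / 1_000_000 / elapsed if elapsed > 0 else float("inf")
    return elapsed, mbps


if __name__ == "__main__":
    # Demo with a 16 MiB scratch file; use a file larger than RAM for real tests.
    with tempfile.TemporaryDirectory() as scratch:
        source = os.path.join(scratch, "source.bin")
        with open(source, "wb") as fh:
            fh.write(os.urandom(16 * 1024 * 1024))
        seconds, mbps = time_copy(source, os.path.join(scratch, "copy.bin"))
        print(f"copied in {seconds:.3f} s ({mbps:.0f} MB/s)")
```

For a fair comparison with benchmark figures, point `src` and `dst` at the drives under test and use a file large enough that caching cannot hide the drive's real speed.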

Professional and Enthusiast Opinions

Storage reviewers, system integrators, and IT admins generally agree on CrystalDiskMark’s strengths and limits:

- It's a quick way to see which performance tier a drive falls into.

- It’s not meant to simulate OS, software, or enterprise use cases exactly.

- It offers easy sharing of performance data across forums and support platforms.

On tech review sites and forums, CrystalDiskMark scores are used alongside thermal imaging, real-world loading times, and database testing tools to form a comprehensive performance picture.

Interpreting Scores in Practical Context

Understanding what CrystalDiskMark numbers mean helps set realistic expectations:

- Sequential Read (Q8T1): Fast for large file transfers, relevant in content creation.

- Random Read (4KiB Q1T1): A good metric for OS snappiness and app launching.

- Write Speed Scores: Crucial for those who install or update apps frequently, or work with compression-heavy tasks.

- IOPS: Best indicator for database-heavy environments or rapid access to many small files.

By viewing scores this way, you’re more likely to see which scores apply to your specific workflows.

Case Study: Web Developers and CrystalDiskMark

Developers managing local environments with tools like Docker, Git, or Node.js often wonder if upgrading from a SATA SSD to NVMe will impact productivity. CrystalDiskMark shows an impressive jump in sequential speed—but Git operations or npm installs depend more on random small-file access and latency, not throughput.

As a result, while CrystalDiskMark shows a clear improvement, day-to-day gains may feel minimal unless you're working with huge databases or compiling large codebases such as Chromium.
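
A rough way to see the small-file effect on your own machine is to read the same amount of data as many small files and as one large file, then compare the timings. This is a minimal sketch with made-up helper names; because freshly written files usually sit in the operating system's cache, it mostly measures per-file overhead unless the cache is cold:

```python
import os
import tempfile
import time


def write_files(directory, count, size_bytes):
    """Create count files of size_bytes each and return their paths."""
    payload = os.urandom(size_bytes)
    paths = []
    for i in range(count):
        path = os.path.join(directory, f"file_{i:05d}.bin")
        with open(path, "wb") as fh:
            fh.write(payload)
        paths.append(path)
    return paths


def time_reads(paths):
    """Read every file fully; return (elapsed seconds, total bytes read)."""
    start = time.perf_counter()
    total = 0
    for path in paths:
        with open(path, "rb") as fh:
            total += len(fh.read())
    return time.perf_counter() - start, total


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as small_dir, tempfile.TemporaryDirectory() as big_dir:
        small = write_files(small_dir, 2000, 4096)       # 2000 files of 4KiB
        big = write_files(big_dir, 1, 2000 * 4096)       # one file, same total size
        print("small files:", time_reads(small))
        print("one file:   ", time_reads(big))
```

The many-file case is closer to what Git or npm do, which is why their speed tracks small-file latency more than sequential throughput.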

Alternatives That Reflect Real Usage Better

Here are tools and methods better aligned with specific real-world scenarios:

- PCMark 10 Storage Benchmarks: Runs common tasks like app launches, file copying, and media editing.

- Anvil’s Storage Utilities: More detailed latency and mixed I/O testing.

- Timed App Launch Tests: Time how long Word, Photoshop, or a game takes to launch, using a stopwatch or an automation script.

- DiskSpd (Microsoft's command-line storage testing tool): Useful for simulating server and enterprise loads with fine-grained control over block size, queue depth, and thread count.
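
For the scripted-timing approach in the list above, a small Python harness is often enough. The sketch below times how long a command takes to run to completion, so it suits command-line tools and scripts rather than GUI applications that stay open; the `time_command` name and the repeat count are assumptions for this example. The first run is often slower than later ones because later runs are served from the file cache:

```python
import statistics
import subprocess
import sys
import time


def time_command(argv, repeats=5):
    """Run argv repeatedly; return (median seconds, list of all samples)."""
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        subprocess.run(
            argv,
            check=True,
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
        )
        samples.append(time.perf_counter() - start)
    return statistics.median(samples), samples


if __name__ == "__main__":
    # Example: time how long the Python interpreter takes to start and exit.
    median_s, runs = time_command([sys.executable, "-c", "pass"])
    print(f"median: {median_s * 1000:.1f} ms over {len(runs)} runs")
```

Comparing the first sample with the median gives a rough sense of how much of the launch time actually depends on the drive.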

Conclusion

CrystalDiskMark is a powerful and lightweight benchmarking tool that delivers quick insight into your storage device’s raw performance. It’s great for comparisons, troubleshooting, and initial evaluations—but don’t rely on it as a crystal ball for everyday speed.

To truly measure how fast your storage feels in real use, combine it with app-based testing, OS monitoring, and hands-on timing. Use CrystalDiskMark as a starting point—not the final word.